Ch. 8 Memory-management strategies – Flashcards

question

The only general-purpose storage that the CPU can access directly (3)

answer

• main memory - accessed via a transaction on the memory bus. • CPU cache - between CPU and main memory - smaller, faster memory which stores copies of the data from frequently used main memory locations. • the registers built into the processor itself - generally accessible within 1 cycle of the CPU clock.

question

base register VS limit register

answer

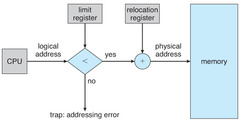

• A pair of *base* and *limit* registers defines the logical address space.
• The CPU must check every memory access generated in user mode to be sure it falls between base and base + limit for that user.
• The *base* register holds the smallest legal physical memory address.
• The *limit* register specifies the size of the range.
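The check the CPU performs can be sketched in a few lines. This is only an illustration: the function name and the sample register values are assumptions, and real hardware does this comparison in circuitry on every user-mode access.

```python
def is_legal_access(address: int, base: int, limit: int) -> bool:
    """Return True if the address lies in [base, base + limit)."""
    return base <= address < base + limit


# Hypothetical register values, chosen only for illustration.
BASE = 300040
LIMIT = 120900

print(is_legal_access(300040, BASE, LIMIT))  # first legal address
print(is_legal_access(BASE + LIMIT, BASE, LIMIT))  # one past the range
```

An access that fails the check would cause a trap to the operating system rather than a `False` return value.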

question

Address binding stages (3)

answer

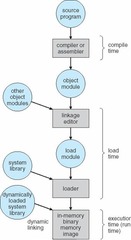

Binding means mapping from one address space to another. The binding of instructions and data to memory addresses can be done at any step along the way:
• *Compile time* - if it is *known* at compile time where the process will reside in memory, then *absolute code* will be generated.
  - the code must be recompiled if the starting location changes
• *Load time* - if it is *not known* at compile time where the process will reside in memory, then the compiler must generate *relocatable code*. In this case, final binding is delayed until load time.
  - if the starting address changes, we need only reload the user code to incorporate the changed value
• *Execution time* - if the process can be moved during its execution from one memory segment to another, then binding must be delayed until run time.
  - needs hardware support for address maps (e.g., base and limit registers)
  - used by most general-purpose operating systems
  - logical address ≠ physical address

question

logical = virtual VS physical address space. MMU and relocation register.

answer

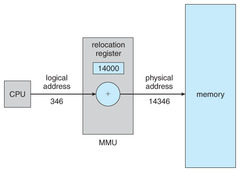

• *Logical address = virtual address* - generated by the CPU. Virtual addresses are used by applications, and are translated to physical addresses when accessing memory. The applications only see virtual addresses. • *Physical address* - the address seen by the memory unit. • The set of logical addresses generated by a program is a *logical address space*. The set of all physical addresses corresponding to these logical addresses is a *physical address space*. • *MMU = memory-management unit* - a hardware device that maps virtual addresses to physical addresses. *Relocation register* = base register for MMU.

question

Dynamic loading (of routines)

answer

Used to obtain better memory-space utilization. All routines are kept on a disk in a relocatable load format. A routine is not loaded until it is called. • particularly useful when large amounts of code are needed to handle infrequently occurring cases, such as error routines. • does not require special support from the OS: - Implemented through program design - OS can help by providing libraries to implement dynamic loading

question

Dynamically linked libraries. Static linking VS dynamic linking.

answer

• *Dynamically linked libraries* are system libraries that are linked to user programs when the programs are run.
• *Static linking* - system libraries and program code are combined by the loader into the binary program image. Each program on a system must include a copy of its language library (or at least the routines it references) in the executable image → wastes both disk space and main memory.
• *Dynamic linking* - linking is postponed until execution time.
  - *Stub* - a small piece of code that indicates how to locate the appropriate memory-resident library routine, or how to load the library if the routine is not already present.
  - The OS checks whether the routine is in the process's address space. If it is not, the routine is added to the address space.
  - The stub replaces itself with the address of the routine and executes the routine.
  + easy library updates

question

Swapping

answer

A process can be *swapped* temporarily out of memory to a *backing store* and then brought back into memory (swapped in) for continued execution.
+ increases the degree of multiprogramming (the total physical address space of all processes can exceed the real physical memory of the system).
*Roll out/roll in* ~ swapping for priority-based scheduling.
• *Standard swapping* - between main memory and the backing store. The dispatcher checks whether the next process in the ready queue is in memory. If it is not, and there is not enough free space, the dispatcher swaps out some process in memory and swaps in the desired process. It then reloads registers and transfers control to the selected process.
  - too much swapping time, too little execution time → not used in modern operating systems
• *Modified versions* of swapping - found on many systems [UNIX, Linux, Windows], typically working in conjunction with *virtual memory*:
  - Swapping is normally disabled but starts if the amount of free memory falls below a threshold. It is halted when the amount of free memory increases again.
  - Another version: swapping portions of processes (to decrease swap time).
• *On mobile systems* - swapping in any form is typically not supported (because storage is flash memory), although *paging* is supported:
  - iOS - asks applications to voluntarily relinquish allocated memory.
  - Android - if insufficient free memory is available, it may write application state to flash memory and then terminate the process.

question

Memory protection: the relocation-register scheme

answer

We can prevent a process from accessing memory it does not own by combining a limit register with a relocation register. The dispatcher loads the relocation and limit registers with the correct values as part of the *context switch*. Each logical address must fall within the range specified by the *limit register*. The *MMU* then maps the logical address dynamically by adding the value in the *relocation register*, and the mapped address is sent to memory. + allows the OS's size to change dynamically (for using transient code).
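A minimal sketch of this scheme, assuming the limit check happens before the relocation value is added. The exception class and the register values are hypothetical; in hardware a failed check is a trap, not an exception.

```python
class AddressingError(Exception):
    """Stands in for the hardware trap raised on an illegal access."""


def relocate(logical: int, relocation: int, limit: int) -> int:
    """Check a logical address against the limit, then relocate it."""
    if not 0 <= logical < limit:
        raise AddressingError(f"logical address {logical} outside limit {limit}")
    # The MMU adds the relocation register to form the physical address.
    return logical + relocation


# Hypothetical register contents loaded by the dispatcher.
print(relocate(346, relocation=14000, limit=1000))
```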

question

transient operating-system code

answer

Transient operating-system code comes and goes as needed. For example, code for device drivers that are not commonly used.

question

Contiguous memory allocation, 2 methods /kənˈtɪɡjʊəs/

answer

*Contiguous memory allocation*: Each process is contained in a single section of memory that is contiguous (neighbouring) to the section containing the next process.
• *Multiple-partition method* (no longer in use): Memory is divided into several fixed-sized partitions, and each partition may contain exactly 1 process. When a partition is free, a process is selected from the input queue and loaded into the free partition.
• *Variable-partition scheme* (batch environments): The OS keeps a table indicating which parts of memory are available (*holes*) and which are occupied. Memory is allocated to processes until, finally, the memory requirements of the next process cannot be satisfied (no hole is large enough to hold that process). The OS can then wait until a large enough block is available, or it can skip down the input queue to find a process with smaller memory requirements. Adjacent holes are merged into one.
→ Dynamic storage-allocation problem

question

Dynamic storage-allocation problem

answer

*Dynamic storage-allocation problem* - how to satisfy a request of size n from a list of free holes:
• *First fit* - allocate the first hole that is big enough, starting either at the beginning of the set of holes or at the location where the previous first-fit search ended.
  + generally faster
  - suffers from external fragmentation
• *Best fit* - allocate the smallest hole that is big enough → produces the smallest leftover hole.
  - suffers from external fragmentation
• *Worst fit* - allocate the largest hole → the largest leftover hole may be more useful than the smallest.
  - worst in terms of time and storage utilization
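The three strategies can be compared on a small list of hole sizes. This is a sketch under simplifying assumptions: holes are represented only by their sizes, each function returns the index of the chosen hole (or `None`), and the hole sizes and request size are made up for illustration.

```python
from typing import List, Optional


def first_fit(holes: List[int], n: int) -> Optional[int]:
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= n:
            return i
    return None


def best_fit(holes: List[int], n: int) -> Optional[int]:
    """Index of the smallest hole that is big enough."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    return min(candidates)[1] if candidates else None


def worst_fit(holes: List[int], n: int) -> Optional[int]:
    """Index of the largest hole, provided it is big enough."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    if not candidates:
        return None
    return max(candidates, key=lambda c: c[0])[1]


holes = [100, 500, 200, 300, 600]  # hypothetical hole sizes
request = 212
print(first_fit(holes, request), best_fit(holes, request), worst_fit(holes, request))
```

Best fit and worst fit must examine the whole list unless it is kept sorted by size, which is one reason first fit is generally faster.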

question

Internal VS External fragmentation, 50-percent rule

answer

• *Internal fragmentation* - unused memory that is internal to a partition (one of the fixed-size blocks).
• *External fragmentation* - exists when there is enough total memory space to satisfy a request but the available spaces are not contiguous: storage is fragmented into a large number of small holes. Solutions:
  1) *compaction* - place all free memory in 1 large block by moving programs and data and then updating their base registers (possible only if relocation is dynamic and is done at execution time). Can be expensive.
  2) *segmentation* or *paging* - allow a noncontiguous physical address space, so a process can be given memory wherever it is available.
• *50-percent rule*: (first fit) given N allocated blocks, another 0.5 N blocks will be lost to fragmentation.
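As a quick check on what the 50-percent rule implies, assume (as an idealization) that all blocks are of comparable size. The fraction of memory lost to fragmentation is then:

$$
\frac{0.5N}{N + 0.5N} = \frac{0.5N}{1.5N} = \frac{1}{3}
$$

That is, roughly one-third of memory may be unusable.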

question

Noncontiguous memory allocation, 2 methods

answer

• *Noncontiguous memory allocation* - a process can be allocated physical memory wherever such memory is available. • *Segmentation* - a logical address space of a process is a collection of segments (stacks, variables, functions...). Does not avoid external fragmentation and the need for compaction. • *Paging* - used in most operating systems - an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.

question

Segmentation

answer

A logical address space of a process is a collection of segments (stacks, variables, functions...). Each segment has a name and a length. A logical address specifies both the segment and the offset within that segment. The *segment table* maps these two-dimensional programmer-defined addresses into one-dimensional physical addresses. Each entry of the table has a *segment base* (start) and a *segment limit* (length). Stored in PCB.
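A minimal sketch of segment-table translation, assuming a logical address is a pair (segment number s, offset d) and each table entry is a (base, limit) pair. The table contents and the exception name are made up for illustration.

```python
from typing import List, Tuple


class SegmentTrap(Exception):
    """Stands in for the addressing-error trap to the OS."""


def translate_segment(table: List[Tuple[int, int]], s: int, d: int) -> int:
    """Map (segment number, offset) to a physical address."""
    if not 0 <= s < len(table):
        raise SegmentTrap(f"no such segment: {s}")
    base, limit = table[s]
    if not 0 <= d < limit:
        raise SegmentTrap(f"offset {d} beyond segment limit {limit}")
    return base + d


# Hypothetical segment table: (base, limit) per segment.
segment_table = [(1400, 1000), (6300, 400), (4300, 400), (3200, 1100), (4700, 1000)]
print(translate_segment(segment_table, 2, 53))
```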

question

Paging

answer

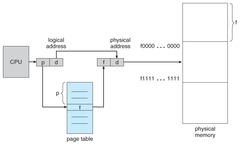

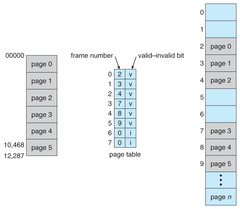

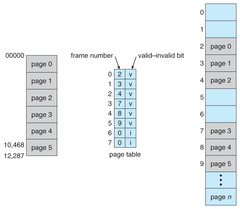

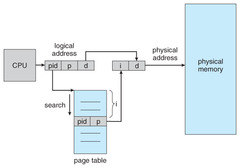

Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.
• Physical memory is broken into fixed-sized blocks called *frames*.
• Logical memory is broken into blocks of the same size called *pages*. The page size (like the frame size) is a power of 2, ranging from 512 bytes to 1 GB per page.
• *Address*: every address generated by the CPU is divided into a page number (p) and a page offset (d).
• *Page table* in main memory translates logical addresses to physical addresses. It uses page numbers as indices and contains the base address of each page in physical memory. Stored in PCB.
• *Frame table (free-frame list)* has 1 entry for each physical page frame, indicating whether the frame is free or allocated and, if allocated, to which page of which process(es).
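The translation can be sketched as follows, assuming a 4 KB page size and a page table that stores frame numbers (the frame's base address is then frame number × page size). The page-table contents are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size; must be a power of 2
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 bits for a 4 KB page


def split_address(logical: int):
    """Split a logical address into (page number p, page offset d)."""
    return logical >> OFFSET_BITS, logical & (PAGE_SIZE - 1)


def translate_page(page_table, logical: int) -> int:
    """Look up the frame for page p and re-attach the offset d."""
    p, d = split_address(logical)
    frame = page_table[p]  # page number is the index into the table
    return frame * PAGE_SIZE + d


page_table = [5, 6, 1, 2]  # hypothetical: page i lives in frame page_table[i]
print(translate_page(page_table, 2 * PAGE_SIZE + 100))
```

Because the page size is a power of 2, the split needs only a shift and a mask rather than a division.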

question

Page table in memory, 3 implementation techniques

answer

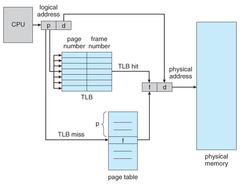

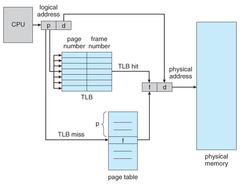

The page table uses page numbers as indices and contains the base address of each page in physical memory.
• In the simplest case, it is implemented as a set of dedicated registers, built with very high-speed logic. The CPU dispatcher reloads these registers. OK for small page tables (e.g., 256 entries - way too small for modern computers).
• *PTBR = page-table base register* - the page table is kept in main memory and the PTBR points to it. Changing page tables requires changing only this one register, reducing context-switch time.
  - slow: accessing a byte takes 2 memory accesses (one for the page-table entry, one for the byte itself)
• *TLB = translation look-aside buffer* - the standard solution - a small (32-1024 entries), fast-lookup hardware cache.
  1) The logical page number is presented to the TLB.
  1.1) TLB hit - the page# is found; its frame# is immediately available and is used to access memory.
  1.2) TLB miss - a memory reference to the page table is made (automatically in hardware or via an interrupt to the OS). The page# and frame# are added to the TLB. If the TLB is full, an existing entry is replaced.
  2) Once the frame# is obtained, it is used to access memory.
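The TLB hit/miss steps can be simulated in software. This sketch assumes a least-recently-used replacement policy (the card does not name one), and the class name, capacity, and page-table contents are all hypothetical.

```python
from collections import OrderedDict


class TLB:
    """Tiny TLB model: page number -> frame number, LRU replacement."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()  # least recently used entry first
        self.hits = 0
        self.misses = 0

    def lookup(self, page: int, page_table) -> int:
        if page in self.entries:  # TLB hit: frame is available at once
            self.hits += 1
            self.entries.move_to_end(page)
            return self.entries[page]
        self.misses += 1  # TLB miss: consult the page table in memory
        frame = page_table[page]
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        self.entries[page] = frame
        return frame


tlb = TLB(capacity=2)
page_table = {0: 7, 1: 3, 2: 9}
for page in (0, 0, 1, 2, 0):
    tlb.lookup(page, page_table)
print(tlb.hits, tlb.misses)
```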

question

Memory protection in a paged environment (3)

answer

• *ASID = address-space identifier*: Some TLBs store an ASID in each entry. The ASID uniquely identifies each process and provides address-space protection for that process. Without ASIDs, the TLB must be flushed at every context switch. When the TLB attempts to resolve a virtual page number, it ensures that the ASID of the currently running process matches the ASID associated with the virtual page. If the ASIDs do not match, the attempt is treated as a TLB miss.
• *Valid-invalid bit*: Attached to each entry in the page table.
  - valid: the associated page is in the process's logical address space → legal to access
  - invalid: the page is not in the process's logical address space; illegal addresses are trapped
• *PTLR = page-table length register*: Hardware register that indicates the size of the page table. This value is checked against every logical address to verify that the address is in the valid range for the process. Failure of this test causes an error trap to the OS.
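The PTLR and valid-invalid checks can be sketched together. The representation is an assumption: each page-table entry is a (frame, valid) pair, the PTLR holds the number of entries, and a hypothetical exception stands in for the trap to the OS.

```python
class ProtectionTrap(Exception):
    """Stands in for the error trap to the operating system."""


def checked_frame(page_table, ptlr: int, page: int) -> int:
    """Return the frame for a page after both protection checks."""
    if not 0 <= page < ptlr:  # PTLR check: page number within table size
        raise ProtectionTrap(f"page {page} beyond page-table length {ptlr}")
    frame, valid = page_table[page]
    if not valid:  # valid-invalid bit check
        raise ProtectionTrap(f"page {page} is marked invalid")
    return frame


# Hypothetical table: (frame number, valid bit) per page.
page_table = [(2, True), (3, True), (0, False)]
print(checked_frame(page_table, ptlr=3, page=1))
```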

question

Shared pages

answer

*Reentrant code = pure code* - it can be shared. - never changes during execution - heavily used programs (compilers, window systems, run-time libraries..) can be shared

question

3 techniques for structuring the page table

answer

• *Hierarchical paging* - divide the page table into smaller pieces. • *Hashed page tables* - the virtual page number is hashed into a page (hash) table. • *Inverted page tables* - 1 entry for each frame.

question

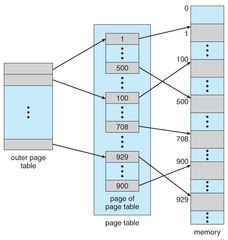

Structure of the Page Table: Hierarchical paging

answer

*Hierarchical paging* - divide the page table into smaller pieces:
• Forward-mapped page table (two-level paging algorithm) - the page table itself is also paged.
• Three-level paging scheme - the outer page table is itself paged.
• Four-level paging scheme - the second-level outer page table is also paged...
- each extra level adds a memory access per translation
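A sketch of two-level translation, assuming a 32-bit logical address split into a 10-bit outer index (p1), a 10-bit inner index (p2), and a 12-bit offset (d). The bit widths and table contents are assumptions chosen for illustration, and dictionaries stand in for the in-memory tables.

```python
P1_BITS, P2_BITS, OFFSET_BITS = 10, 10, 12  # assumed 32-bit layout


def split_two_level(addr: int):
    """Split an address into (p1, p2, d)."""
    d = addr & ((1 << OFFSET_BITS) - 1)
    p2 = (addr >> OFFSET_BITS) & ((1 << P2_BITS) - 1)
    p1 = (addr >> (OFFSET_BITS + P2_BITS)) & ((1 << P1_BITS) - 1)
    return p1, p2, d


def translate_two_level(outer_table, addr: int) -> int:
    """Walk the outer table, then the inner table, then add the offset."""
    p1, p2, d = split_two_level(addr)
    inner_table = outer_table[p1]  # first memory access
    frame = inner_table[p2]  # second memory access
    return (frame << OFFSET_BITS) | d


outer_table = {3: {5: 42}}  # hypothetical: only one inner table is present
addr = (3 << 22) | (5 << 12) | 26
print(translate_two_level(outer_table, addr))
```

Only the inner tables that are actually in use need to exist, which is where the memory saving comes from.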

question

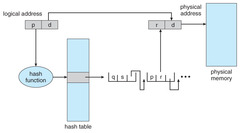

Structure of the Page Table: Hashed page tables

answer

*Hashed page tables* - the virtual page number is hashed into a page (hash) table. Each entry in the hash table contains a linked list of elements that hash to the same location (to handle collisions). Each element contains: 1) the virtual page № 2) the value of the mapped page frame 3) a pointer to the next element in the linked list. *Clustered page tables* - each entry refers to several pages.
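A minimal sketch of a hashed page table with chaining. The hash function (virtual page number modulo the bucket count) is an assumption, and a Python list stands in for each linked list of (virtual page number, frame) elements.

```python
class HashedPageTable:
    """Hash table keyed by virtual page number, collisions kept in chains."""

    def __init__(self, buckets: int = 8):
        self.buckets = [[] for _ in range(buckets)]

    def _index(self, vpn: int) -> int:
        return vpn % len(self.buckets)  # assumed hash function

    def insert(self, vpn: int, frame: int) -> None:
        chain = self.buckets[self._index(vpn)]
        for i, (v, _) in enumerate(chain):
            if v == vpn:  # page already mapped: update in place
                chain[i] = (vpn, frame)
                return
        chain.append((vpn, frame))

    def lookup(self, vpn: int):
        """Walk the chain comparing virtual page numbers; None if unmapped."""
        for v, frame in self.buckets[self._index(vpn)]:
            if v == vpn:
                return frame
        return None


table = HashedPageTable(buckets=4)
table.insert(1, 10)
table.insert(5, 20)  # collides with page 1 when there are 4 buckets
print(table.lookup(5))
```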

question

Structure of the Page Table: Inverted page tables

answer

*Inverted page tables* - cover all physical pages, with 1 entry for each frame. Each entry consists of the virtual address of the page stored in that real memory location, with information about the process that owns the page → only 1 page table in the system, with only 1 entry for each page of physical memory. Shared pages are harder to implement, because there can be only 1 mapping of a virtual address to the shared physical address at a time.
+ decreases the memory needed to store page tables
- increases the time needed to search the table (solved with hashing and a TLB)
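A sketch of an inverted-table lookup, assuming each entry is a (process id, page number) pair and the index of the matching entry is the frame number. The linear search shown here is the slow case; the table contents are hypothetical.

```python
from typing import List, Optional, Tuple


def find_frame(inverted_table: List[Optional[Tuple[int, int]]],
               pid: int, page: int) -> Optional[int]:
    """Search every frame's entry for (pid, page); the index is the frame."""
    for frame, entry in enumerate(inverted_table):
        if entry == (pid, page):
            return frame
    return None  # no frame holds this page


# Hypothetical table: one entry per physical frame, None means free.
inverted_table = [(1, 0), (2, 0), (1, 3), None]
print(find_frame(inverted_table, pid=1, page=3))
```

Replacing the linear scan with a hash lookup on (pid, page) limits the search to one chain, which is the hashing fix the card mentions.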